Pitching your talk and listening to other amazing people

The #uxcampcph logo. Image attributed to: http://uxcampcph.org/Uploads/UXCampCPH_HVID_transparant.png

This is a continuation of an earlier blog post about my experiences at UX Camp CPH 2015, this time focusing on the topics presented there.

In a blog post last week I tried to explain what lean stands for in a broader sense and to relate the concept to an event that I recently attended. UX Camp Copenhagen is a forum organized in a lean fashion, and its keynote speaker was Jeff Gothelf, the father of "Lean UX". In this post I would like to share a bit more about the conference, the sessions I attended and the one that I offered to the other attendees. I will start with Friday night to set the mood, continue with an icebreaker session on Saturday morning, move on to the attendee-generated content and end with Jeff's closing keynote on Lean UX.

Setting the mood

Friday night began with three invited speakers, who offered very different topics. First, Jonas Priesum from theeyetribe talked about eye tracking, the science behind it and its related problems, such as how users might visually select items on-screen. Of course, the inevitable "blink to select" versus "dwell to select" debate spiced up the discussion, but the talk closed with a nice overview of the technology's empowering potential for hospitalized and disabled people.

Next it was time for Johan Knattrup to talk about Skammekrogen, the interactive movie experience that his team created using the Oculus Rift. They directed a 20-minute immersive film that viewers live through the eyes of one of the actors by wearing a virtual reality headset. What was particularly interesting was how their initial screening seemed to doom the whole concept. Viewers did not feel "immersed" in that particular character; in fact, they felt alienated throughout the film while in that actor's shoes. At first the team concluded that they had failed to achieve immersion and that all their shooting and directing efforts had been in vain. But after a more in-depth analysis of the script, they realized that it had actually been written so that this character was distant from everyone else. The viewers' sense of alienation, it turns out, meant they were immersed beyond everyone's initial expectations.

The final speaker of the night was Thomas Madsen-Mygdal, ex-chairman of Podio, who spoke about belief. According to Madsen-Mygdal, belief in something is a choice, and belief in the power of the Internet 20 years ago was what drove humanity forward. He also suggested that those who ultimately succeed in life are those who believe in seemingly unattainable long-term goals, particularly when the odds are against them. Perhaps the thing that stuck in my mind most was the notion of belief as "the most important design tool in life".

Johan Knattrup to the left and Thomas Madsen-Mygdal to the right setting the mood on Friday night. Image attributed to the author.

My takeaway from Friday night was that I was in the right place. Whether you were more of a researcher, an artist or a philosophical type of person, this was the place and the time to share anything you were passionate about, however crazy it might seem.

Breaking the ice

Saturday morning brought us a hidden gem: Ida Aalen's talk about The Core Model. I particularly loved the way she "killed" the homepage-first design approach by showing that most of the time we land on a child page, either from a Google search or by following a link shared on social media. And if we think about it for a second, she is absolutely right: we rarely see the homepage, even when we explore some of a website's information architecture (IA). The framework that she uses extensively and promotes, The Core Model, is definitely something I cannot wait to put into practice in my upcoming design challenges.

Talks from the people and for the people

Luckily, everyone who pitched a talk found a slot on the schedule. This was a testament to the organizers' impressive efforts, given that 27 of us pitched one after another, with thirty seconds each. Once the schedule was ready, I decided to spend my first slot with Nanna and the rest of Think! Digital in a discussion about designing with and for the actual content. We spoke about the importance of getting the real content as early as possible and prototyping with it, instead of the "Lorem ipsum…" placeholder text that is so familiar in the design world. Having the content early lowers the risk that a piece of it will break our layout later in the project. Instead, the content becomes a design constraint known from the very beginning.

My second slot was spent with Pascal from ustwo in London. After an amazing pitch, his was probably the most anticipated talk of the day, and he definitely delivered on his promise. Pascal spoke about the digital identity that we create through all our gadgets, how they quantify us, and the implications of this journal of our lives (e.g., ownership, privacy and longevity), since these journals are very likely to outlive us.

The third session on my list was with Steven from Channel 4, another speaker from the UK. He talked about their design process, which involves experience maps and user journeys, using the launch of his company's "On Demand" product as a case study.

Doing my part

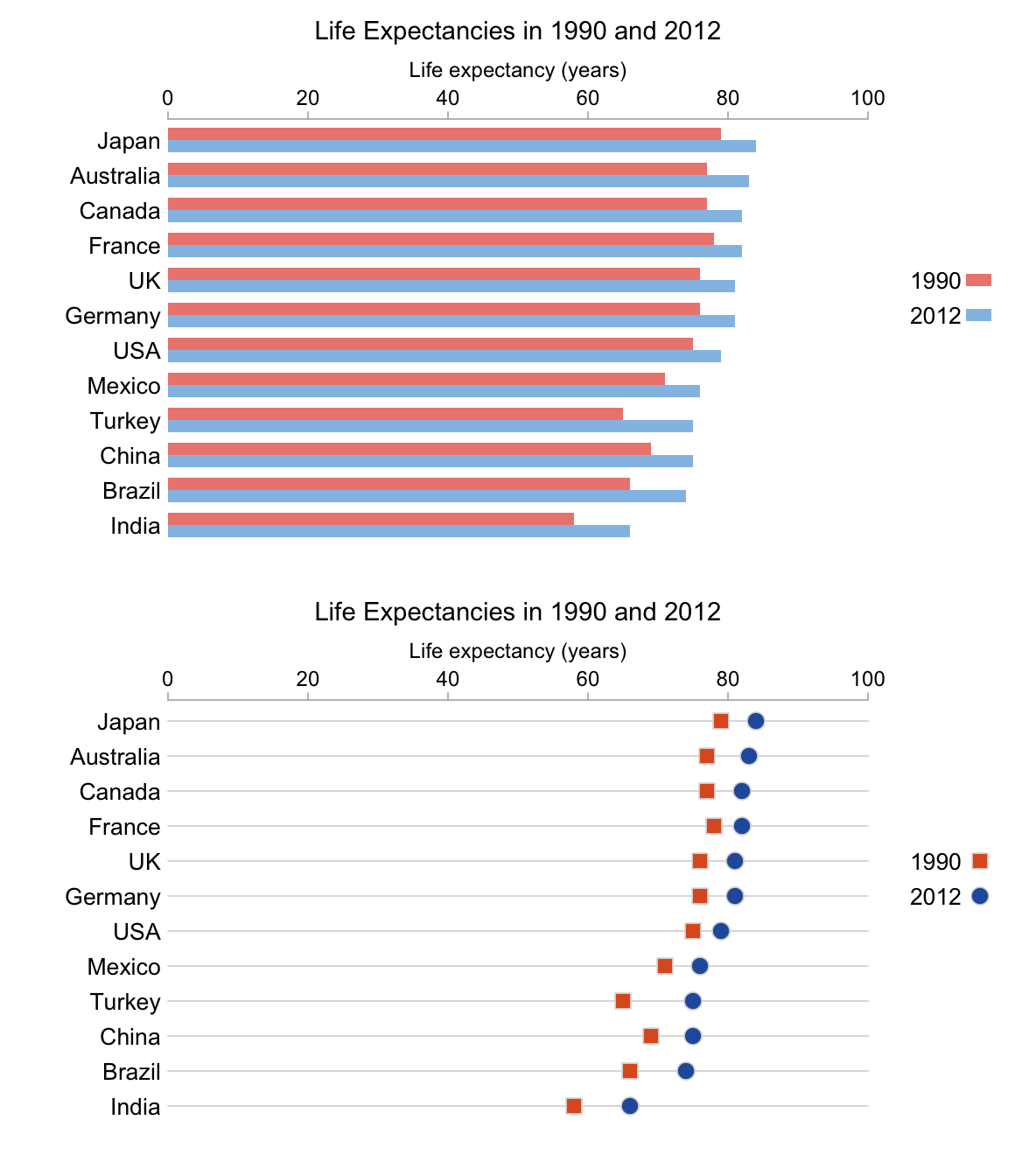

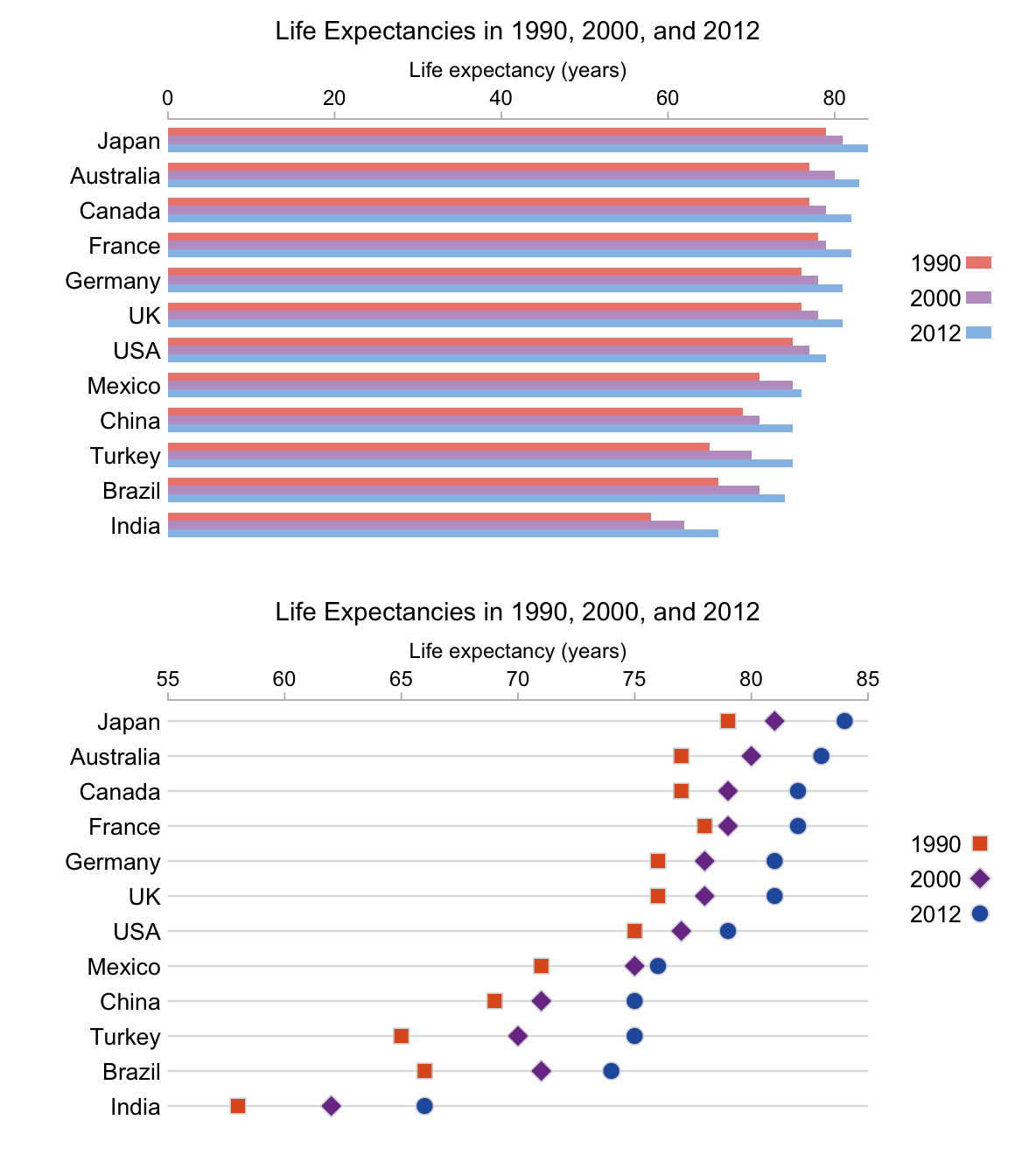

At the end of the day it was time for the talk that I had prepared: "Designing Usable Dashboards". I picked that topic for two reasons. First, we at Infragistics know how to design usable dashboards. We have demonstrated that on a number of occasions, such as the Xamarin Auto Sales Dashboard, the Marketing Dashboard, the Finance Stocks App and the CashFlow Dashboard, to name just some of our latest work. Second, I was really inspired by the webinar "How To Design Effective Dashboards", recently presented by Infragistics Director of Design Tobias Komischke. Although my slides took a researcher's approach to data visualization, the lengthy discussion at the end of the talk left me feeling that it had quenched the crowd's thirst for the topic.

Designing Usable Dashboards presentation by the author. Image attributed to the author.

The icing on the cake

Only one thing stood between us and the beer at the bar that traditionally marks the end of such community-driven forums. It turned out to be inarguably the best talk of the whole event: Jeff Gothelf on Lean UX. Originally from New Jersey, where Infragistics' headquarters are located, he shared his struggle to build a design team at a NYC startup, a team that had to work within the agile software development process already established in the company. Jeff described the ups and downs along the way and the birth of what he eventually coined "the Lean UX approach". He spoke about continuous feedback loops, conversation as a core communication medium, and the importance of learning and change. He also stressed how crucial it is to learn whether your assumptions are valid by testing a hypothesis as quickly as possible and with minimal effort. Once you are better informed, you have to be willing to change and iterate to move your product forward.

Jeff Gothelf talking about lean UX. Image attributed to the author.

UX Camp Copenhagen, thank you once again for a great event; it was a real pleasure to be part of it. I hope to see you again next year.